Content

Table of Contents

1. Background

Download files with large data volumes. For example, in operation audit, you need to download csv files with 250,000 data items, which is about 40+Mb.

In the previous download interface, the GPRC interface will report an error. The GRPC method in sync is queried at one time. The default transmission data size of GRPC is 4Mb, so a timeout error will be reported. Secondly, the amount of data downloaded is too large, and the transmission and data processing process need to be optimized.

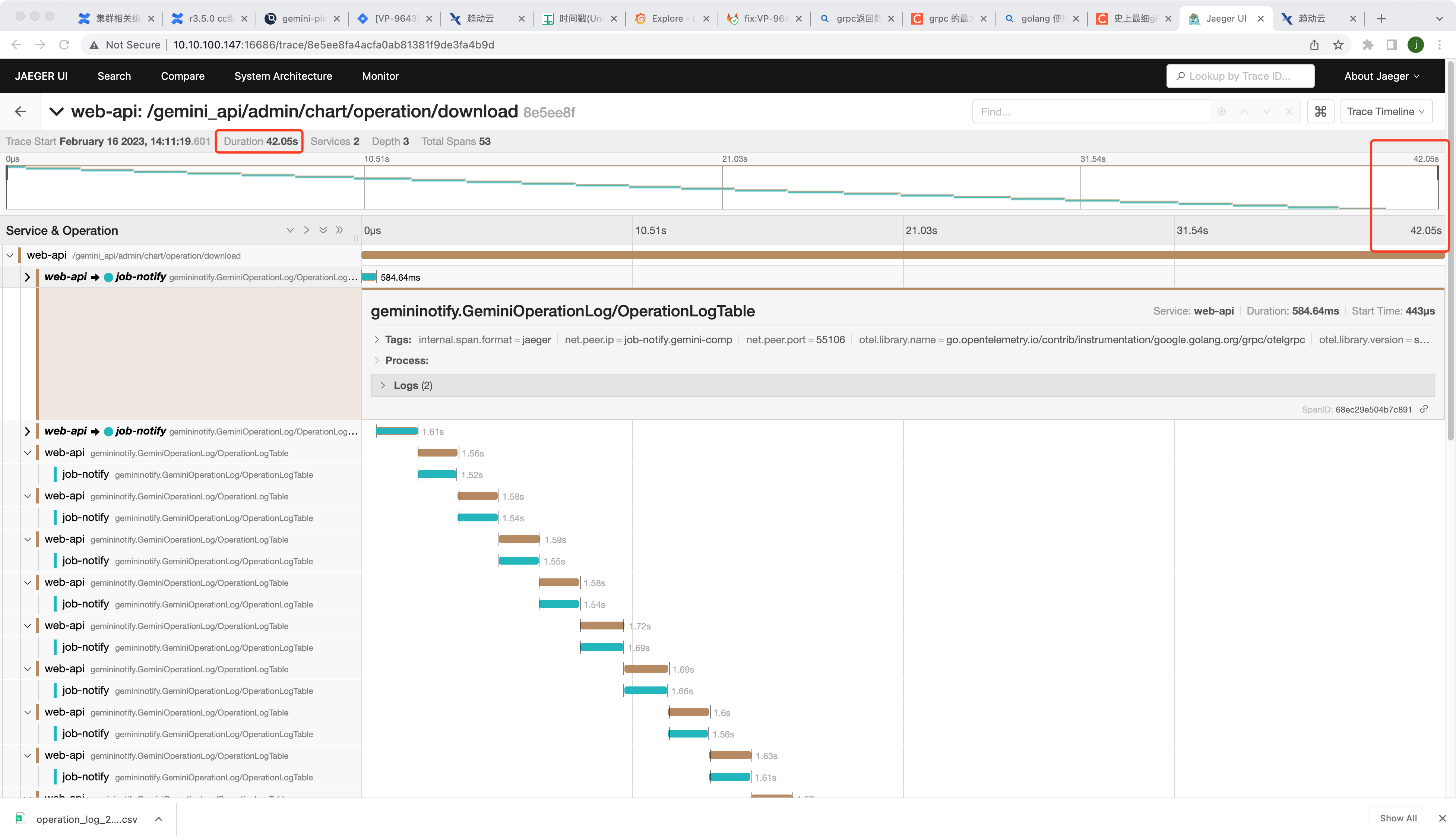

The first time, the bug of gRpc transmission was fixed. On the API side, a loop was used to query the request in the notify request. Using the paging function of MySQL, 10,000 data were queried at a time. It took about 42 seconds to download 250,000+ data. In the test environment, it may take about 60 seconds or longer:

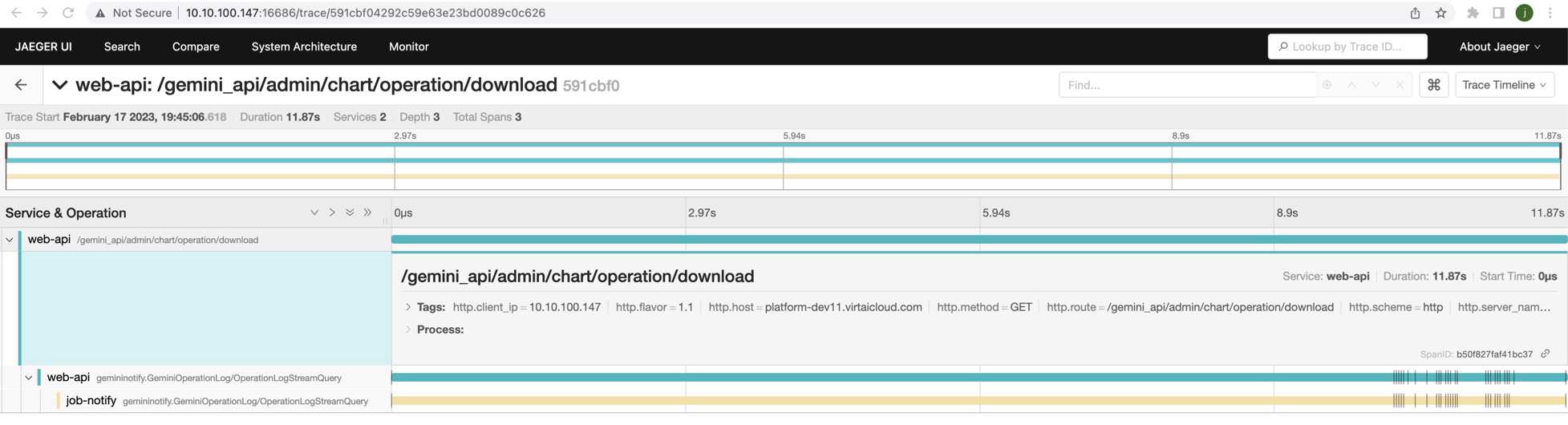

The second time, data processing was optimized and grpc streaming was adopted. After optimization, under the same development environment and data volume, the download interface only takes about 12 seconds, of which MySQL query takes about 10 seconds (based on the first data transmission time). Therefore, excluding the MySQL query time, the rest of the data processing and transmission time is about 2 seconds, which has been greatly optimized.

2. Optimization items

2.1 Query optimization

2.1.1 All indexed fields need to be indexed and take effect

The two fields, operate_time and space_name, are used in where without like, so they need to be indexed.

If the MySQL function of converting to unixtime is used in the query sql, the index will be invalid. For example, if a function such as “SELECT UNIX_TIMESTAMP(operat_time) => 1339123415” is used before, the index will be invalid.

Therefore, it is recommended that when storing in the database, the time field needs to be filtered and directly stored as unixTime, and add an index. In order to avoid the query interface, the queried data needs to be converted once, which consumes a lot of time. Therefore, the format of the operat_time field is consistent with the front-end display, which is a string format. Therefore, the query parameters need to be converted into a string format before querying operat_time:

localStartTime := time.Unix(in.StartTime, 0).Format("2006-01-02 15:04:05")

localEndTime := time.Unix(in.EndTime, 0).Format("2006-01-02 15:04:05")

tsql.Where("operate_time >= ? ", localStartTime).

And("operate_time <= ? ", localEndTime)

if in.UserName != "" {

tsql = tsql.And(`user_name like ? escape '/' `, "%"+EscapeString(in.UserName)+"%")

}

if in.SpaceName != "" {

tsql = tsql.And("space_name = ? ", in.SpaceName)

}

if in.OpContent != "" {

tsql = tsql.And(`operate_content like ? escape '/' `, "%"+EscapeString(in.OpContent)+"%")

}

if in.OpStatus != "" {

tsql = tsql.And(" operate_result = ? ", in.OpStatus)

}

if in.Sip != "" {

tsql = tsql.And(`client_ip like ? escape '/' `, "%"+EscapeString(in.Sip)+"%")

}

2.1.2 Index fields may fail when using ><query

According to experiments, if the query condition is used >< symbol, when the amount of query result data is not much different from the total amount of data, the index will not be used. The specific index principle needs further research.

2.2 grpc information transmission optimization

Use server-side one-way streaming RPC;

This method is suitable for processing grpc big data scenarios, and a large amount of data can be divided into blocks and transmitted to the API side through stream streaming.

For specific usage, please refer to an example:

https://github.com/pramonow/go-grpc-server-streaming-example

2.2.1 proto file

service GeminiOperationLog {

// grpc streaming return

rpc OperationLogStreamQuery (OperationLogTableRequest) returns (stream OperationLogStreamQueryReply);

}

message OperationLogStreamQueryReply{

bytes data = 1;

}

The return data only needs to contain data, and does not need to return code and msg;

2.2.2 Server-side processing

pb.go file generated by the server:

type GeminiOperationLogServer interface {

// Operation audit display query

OperationLogTable(context.Context, *OperationLogTableRequest) (*OperationLogTableReply, error)

// Operation audit drop-down query

OperationLogFilter(context.Context, *OperationLogFilterRequest) (*OperationLogFilterReply, error)

// grpc streaming return

OperationLogStreamQuery(*OperationLogTableRequest, GeminiOperationLog_OperationLogStreamQueryServer) error

mustEmbedUnimplementedGeminiOperationLogServer()

}

The input parameters and return values of streaming grpc are different from those of ordinary grpc.

Server-side implementation method:

// OperationLogStreamQuery provides grpc streaming download

func (c *OperationLogServer) OperationLogStreamQuery(in *pb.OperationLogTableRequest, server pb.GeminiOperationLog_OperationLogStreamQueryServer) error {

ctx := server.Context()

logsCtx := glog.WithCtx(ctx)

operation := "operation log table"

// Omit the processing logic. . .

// Query data table

var opLog []*model.OperationLog

err := tsql.Find(&opLog)

if err != nil {

gerr := notifyerr.NewErrDbError().SetCause(err)

logsCtx.Dynamic(gerr, operation)

return geminierror.WithI18n(ctx, gerr)

}

var msgSize = 10000

for left := 0; left < len(opLog); {

// Omit processing logic. . .

reply.Data = []byte(writer.String())

err = server.Send(reply)

if err != nil {

gerr := notifyerr.NewErrDbError().SetCause(err)

logsCtx.Dynamic(gerr, operation)

return geminierror.WithI18n(ctx, gerr)

}

}

return nil

}

The implemented server needs to define the server in the request parameters, such as:

func (s server) FetchResponse(in *pb.Request, server pb.StreamService_FetchResponseServer) error {

return nil

}

It should be noted that context can be passed from the api, but context does not need to be obtained in the server’s request parameters, but is encapsulated in the server’s interface:

type ServerStream interface {

// SetHeader sets the header metadata. It may be called multiple times.

// When call multiple times, all the provided metadata will be merged.

// All the metadata will be sent out when one of the following happens:

// - ServerStream.SendHeader() is called;

// - The first response is sent out;

// - An RPC status is sent out (error or success).

SetHeader(metadata.MD) error

// SendHeader sends the header metadata.

// The provided md and headers set by SetHeader() will be sent.

// It fails if called multiple times.

SendHeader(metadata.MD) error

// SetTrailer sets the trailer metadata which will be sent with the RPC status.

// When called more than once, all the provided metadata will be merged.

SetTrailer(metadata.MD)

// Context returns the context for this stream.

Context() context.Context

// SendMsg sends a message. On error, SendMsg aborts the stream and the

// error is returned directly.

//

// SendMsg blocks until:

// - There is sufficient flow control to schedule m with the transport, or

// - The stream is done, or

// - The stream breaks.

//

// SendMsg does not wait until the message is received by the client. An

// untimely stream closure may result in lost messages.

//

// It is safe to have a goroutine calling SendMsg and another goroutine

// calling RecvMsg on the same stream at the same time, but it is not safe

// to call SendMsg on the same stream in different goroutines.

SendMsg(m interface{}) error

// RecvMsg blocks until it receives a message into m or the stream is

// done. It returns io.EOF when the client has performed a CloseSend. On

// any non-EOF error, the stream is aborted and the error contains the

// RPC status.

//

// It is safe to have a goroutine calling SendMsg and another goroutine

// calling RecvMsg on the same stream at the same time, but it is not

// safe to call RecvMsg on the same stream in different goroutines.

RecvMsg(m interface{}) error

}

After dividing the data into blocks, you can use the server.Send() method to pass the data into the stream, and the send method also implements the SendMsg() method in the interface:

func (x *geminiOperationLogOperationLogStreamQueryServer) Send(m *OperationLogStreamQueryReply) error {

return x.ServerStream.SendMsg(m)

}

2.2.3 Client-side processing

The client interface generated in the pb.go file:

// For semantics around ctx use and closing/ending streaming RPCs, please refer to https://pkg.go.dev/google.golang.org/grpc/?tab=doc#ClientConn.NewStream.

type GeminiOperationLogClient interface {

// Operation audit display query

OperationLogTable(ctx context.Context, in *OperationLogTableRequest, opts ...grpc.CallOption) (*OperationLogTableReply, error)

//Operation audit drop-down query

OperationLogFilter(ctx context.Context, in *OperationLogFilterRequest, opts ...grpc.CallOption) (*OperationLogFilterReply, error)

//grpc streaming return

OperationLogStreamQuery(ctx context.Context, in *OperationLogTableRequest, opts ...grpc.CallOption) (GeminiOperationLog_OperationLogStreamQueryClient, error)

}

The stream interface does not return reply data, but a client interface, which contains the Recv() method and the standard clientStream:

type GeminiOperationLog_OperationLogStreamQueryClient interface {

Recv() (*OperationLogStreamQueryReply, error)

grpc.ClientStream

}

type ClientStream interface {

// Header returns the header metadata received from the server if there

// is any. It blocks if the metadata is not ready to read.

Header() (metadata.MD, error)

// Trailer returns the trailer metadata from the server, if there is any.

// It must only be called after stream.CloseAndRecv has returned, or

// stream.Recv has returned a non-nil error (including io.EOF).

Trailer() metadata.MD

// CloseSend closes the send direction of the stream. It closes the stream

// when non-nil error is met. It is also not safe to call CloseSend

// concurrently with SendMsg.

CloseSend() error

// Context returns the context for this stream.

//

// It should not be called until after Header or RecvMsg has returned. Once

// called, subsequent client-side retries are disabled.

Context() context.Context

// SendMsg is generally called by generated code. On error, SendMsg aborts

// the stream. If the error was generated by the client, the status is

// returned directly; otherwise, io.EOF is returned and the status of

// the stream may be discovered using RecvMsg.

//

// SendMsg blocks until:

// - There is sufficient flow control to schedule m with the transport, or

// - The stream is done, or

// - The stream breaks.

//

// SendMsg does not wait until the message is received by the server. An

// untimely stream closure may result in lost messages. To ensure delivery,

// users should ensure the RPC completed successfully using RecvMsg.

//

// It is safe to have a goroutine calling SendMsg and another goroutine

// calling RecvMsg on the same stream at the same time, but it is not safe

// to call SendMsg on the same stream in different goroutines. It is also

// not safe to call CloseSend concurrently with SendMsg.

SendMsg(m interface{}) error

// RecvMsg blocks until it receives a message into m or the stream is

// done. It returns io.EOF when the stream completes successfully. On

// any other error, the stream is aborted and the error contains the RPC

// status.

//

// It is safe to have a goroutine calling SendMsg and another goroutine

// calling RecvMsg on the same stream at the same time, but it is not

// safe to call RecvMsg on the same stream in different goroutines. RecvMsg(m interface{}) error

}

Client requests grpc and processes the return stream:

//Grpc to sync-notify to get data

// Fill in the request

ctx := c.Request.Context()

grpcReq := &gemininotify.OperationLogTableRequest{

RequestSource: &gemininotify.RequestSource{

RoleFrom: c.GetString("RoleFrom"),

SpaceId: c.GetString("SpaceId"),

UserId: int64(c.GetInt("UserId")),

},

}

// Access grpc to get data

var grpcRes gemininotify.GeminiOperationLog_OperationLogStreamQueryClient

err := grpcconn.WithGrpcClient(

&grpcconn.ConnParam{

DialType: grpcconn.DialType_DialCentralContext,

Address: config.Config.GeminiNotifyUrl,

Context: ctx,

},

func(conn *grpc.ClientConn) error {

client := gemininotify.NewGeminiOperationLogClient(conn)

var err error

grpcRes, err = client.OperationLogStreamQuery(ctx, grpcReq)

return err

},

)

// error handling

if err != nil {

geminiErr := geminierror.FromGrpcError(err)

logsCtx.Dynamic(geminiErr, operation) c.JSON(http.StatusOK, vm.GetReturnMsg(c, geminiErr))

return

}

// Receive grpc stream data

for {

resp, err := grpcRes.Recv()

if errors.Is(err, io.EOF) {

break

}

if err != nil {

geminiErr := geminierror.FromGrpcError(err)

logsCtx.Dynamic(geminiErr, operation)

c.JSON(http.StatusOK, vm.GetReturnMsg(c, geminiErr))

return

}

c.Writer.Write(resp.Data)

c.Writer.Flush()

}

When receiving the return value here, you can determine whether the stream is closed by the server by judging the returned error type, and jump out of the loop at the same time:

for {

resp, err := grpcRes.Recv()

if errors.Is(err, io.EOF) {

break

}

}

2.3 API-side optimization

Previous approach: Get MySQL data in sync, splice it into a structure array, then pass the large array of data to the API through the grpc interface, process the array on the API side, and then convert the array into []byte format in batches. In this process, the array is traversed twice, and a two-dimensional array is used when converting to []byte format, which consumes a lot of time. Therefore, in the scenario of extremely large data volumes, it is necessary to minimize the traversal of the array.

So under this background, the following optimizations were made:

First, on the API side, considering the large amount of data transmitted, the grpc interface is designed to directly transmit data in []byte format, and write directly to c.Writer after receiving the data:

////Before requesting grpc, first define the context header, define the file name and format, and write the fixed table data into the file

c.Writer.Header().Set("Content-type", "application/octet-stream")

// Convert to csv file

_, zoneOffset := time.Now().Zone() //This uses the current time zone. After internationalization, this should come from the time zone used when the user opens the interface.

fileName := "operation_log_" + statchart.TmString(zoneOffset, time.Now().Unix(), "2006-01-02-15_04_05") + ".csv"

c.Writer.Header().Set("Content-Disposition", "attachment; filename="+fileName)

csvBytes := make([]byte, 0)

// Add utf-8 bom header to prevent excel from opening

csvBytes = append(csvBytes, Utf8BomHeader...)

tableHeader := []string{"Operation time", "Name", "User name", "Operation content", "Space", "Source IP", "Operation status"}

csvBytes = append(csvBytes, []byte(strings.Join(tableHeader, ",")+"\r\n")...)

c.Writer.Write(csvBytes)

/////Start requesting grpc and get []byte data

// Receive grpc stream data

for {

resp, err := grpcRes.Recv()

if errors.Is(err, io.EOF) {

break

}

if err != nil {

geminiErr := geminierror.FromGrpcError(err)

logsCtx.Dynamic(geminiErr, operation)

c.JSON(http.StatusOK, vm.GetReturnMsg(c, geminiErr))

return

}

c.Writer.Write(resp.Data)

c.Writer.Flush()

}

2.4 grpc Server-side optimization

Hundreds of thousands of data items are processed at a rate of 10,000 each, and are transmitted to the grpc channel using the server.send() method. When processing strings, strings.builder is used to concatenate strings, and a long string is directly converted to a []byte type. In the csv file, “,” is the separator between characters. To prevent a single string from containing “,” itself, double quotes are used to wrap each item.

var msgSize = 10000

for left := 0; left < len(opLog); {

// Transmit every msgSize data

right := left + msgSize

if right > len(opLog) {

right = len(opLog)

}

reply := new(pb.OperationLogStreamQueryReply)

writer := strings.Builder{}

// Pre-allocate 5Mb

writer.Grow(5 << 20)

buf := make([]byte, 0, 5<<20)

for _, op := range opLog[left:right] {

buf = append(buf, op.OperateTime.Format("2006-01-02 15:04:05")...)

buf = append(buf, "\t,\""...)

// Add a suffix here\t Prevent excel from automatically converting the time format to 2023/2/14 23:58:21 when opening it

writer.WriteString(op.OperateTime.Format("2006-01-02 15:04:05"))

writer.WriteString("\t,\"")

writer.WriteString(op.DisplayName)

writer.WriteString(`","`)

writer.WriteString(op.UserName)

writer.WriteString(`","`)

writer.WriteString(op.OperateContent)

writer.WriteString(`","`)

writer.WriteString(op.SpaceName)

writer.WriteString(`","`)

writer.WriteString(op.ClientIp)

writer.WriteString(`","`)

writer.WriteString(op.OperateResult)

writer.WriteString("\"\r\n")

}

reply.Data = []byte(writer.String())

err = server.Send(reply)

if err != nil {

gerr := notifyerr.NewErrDbError().SetCause(err)

logsCtx.Dynamic(gerr, operation)

return geminierror.WithI18n(ctx, gerr)

}

left = right

}

2.4.1 Analysis of string concatenation performance and principle

On the grpc server side, directly concatenate the retrieved data into a string to avoid the performance consumption of traversing the array and converting strings to []byte for each row in the array scenario. However, when using “+” to concatenate strings, the performance becomes very poor. After a simple analysis of the reasons, it is found that using “+” to concatenate strings will have a large performance consumption.

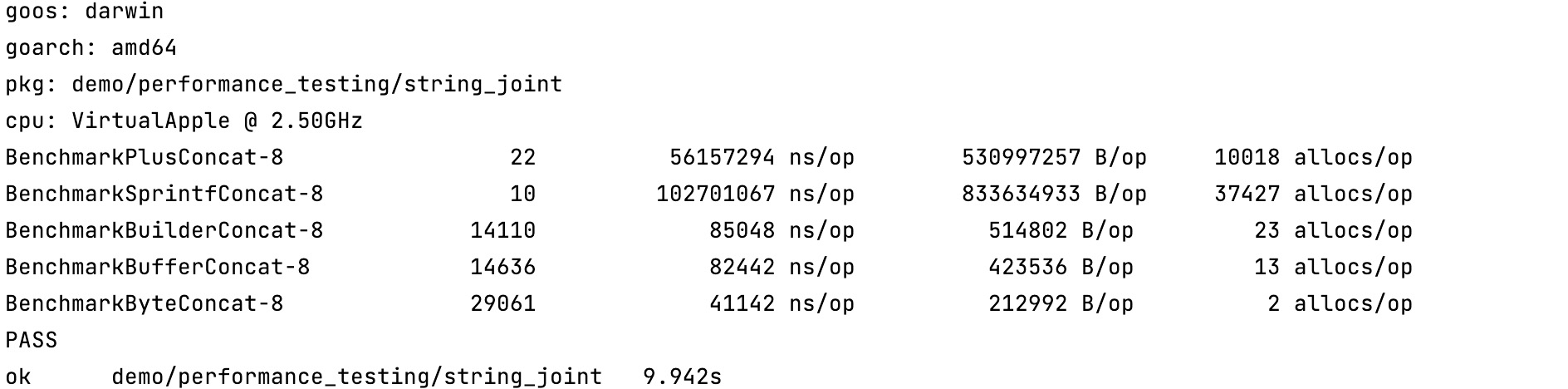

Do a small experiment using benchmark:

// use + sign

func plusConcat(n int, str string) string {

s := ""

for i := 0; i < n; i++ {

s += str

}

return s

}

// use sprintf

func sprintfConcat(n int, str string) string {

s := ""

for i := 0; i < n; i++ {

s = fmt.Sprintf("%s%s", s, str)

}

return s

}

//Use builder method

func builderConcat(n int, str string) string {

var builder strings.Builder

for i := 0; i < n; i++ {

builder.WriteString(str)

}

return builder.String()

}

//Use bytes.Buffer

func bufferConcat(n int, str string) string {

buffer := new(bytes.Buffer)

for i := 0; i < n; i++ {

buffer.WriteString(str)

}

return buffer.String()

}

// use byte

func byteConcat(n int, str string) string {

buf := make([]byte, 0, n*len(str))

for i := 0; i < n; i++ {

buf = append(buf, str...)

}

return string

g(buf)

}

The final result is as follows:

From top to bottom, five splicing methods were tested: plus sign, sprintf, builder method, buffer, and byte. The time and memory consumed by directly using the plus sign and the sprintf method are almost 1000 times that of the other three methods. The time consumed by the builder and buffer methods is almost the same. The builder method is often used, and memory can be allocated in advance.

Analyzing the reasons behind this phenomenon, the string type has the following description in the standard library builtin:

// string is the set of all strings of 8-bit bytes, conventionally but not

// necessarily representing UTF-8-encoded text. A string may be empty, but

// not nil. Values of string type are immutable.

type string string

The last sentence shows that the string type is immutable. How do you understand this sentence? From the bottom layer, the structure of string in golang is:

go1.18.8/src/runtime/string.go

type stringStruct struct {

str unsafe.Pointer

len int

}

//Instantiation

//go:nosplit

func gostringnocopy(str *byte) string {

ss := stringStruct{str: unsafe.Pointer(str), len: findnull(str)}

s := *(*string)(unsafe.Pointer(&ss))

return s

}

The str pointer points to the first address of an array. From the instantiation example, we can see that the str pointer is a pointer to byte, so we can determine that the underlying data structure of string is a byte array. This also explains that in the fifth byte method, []byte can be directly concatenated with string…

For []byte, the following operations are possible:

b := []byte("Hello Gopher!")

b [1] = 'T'

For string, modification operations are prohibited:

s := "Hello Gopher!"

s[1] = 'T'

But string can support such operations:

s := "Hello Gopher!"

s = "Tello Gopher!"

That is to say, the content of the address pointed to by the pointer of the string structure str cannot be modified, but the pointer can point to a different address, that is, each time the string is modified, the memory needs to be reallocated once. Therefore, when using the plus sign or sprint, each added string is actually a new string. As the amount of data increases, it is also necessary to re-apply for a larger memory, and it is necessary to execute the gc of the previously allocated memory, so the number of memory allocations increases by a thousand times.

2.4.2 Use builder to concatenate strings

go1.18.8/src/strings/builder.go

The builder.WriteString method is mainly used in the code. After checking the source code, it is found that it is consistent with the fifth fastest method in the benchmark test, which directly appends string to []byte. This method has an additional pointer address check mechanism to prevent pointer escape.

// A Builder is used to efficiently build a string using Write methods.

// It minimizes memory copying. The zero value is ready to use.

// Do not copy a non-zero Builder.

type Builder struct {

addr *Builder // of receiver, to detect copies by value

buf[]byte

}

func (b *Builder) WriteString(s string) (int, error) {

b.copyCheck()

b.buf = append(b.buf, s...)

return len(s), nil

}

func (b *Builder) copyCheck() {

if b.addr == nil {

// This hack works around a failing of Go's escape analysis

// that was causing b to escape and be heap allocated.

// See issue 23382.

// TODO: once issue 7921 is fixed, this should be reverted to

// just "b.addr = b".

b.addr = (*Builder)(noescape(unsafe.Pointer(b)))

} else if b.addr != b {

panic("strings: illegal use of non-zero Builder copied by value")

}

}

2.4.3 Analysis of the principle of converting string to []byte

The previous solution was to convert each string to []byte type using the standard method of []byte() for the two-dimensional array to be generated. However, this method involves copying the underlying array each time and requires millions of conversions, which consumes a lot of memory and time. Therefore, the code chooses to concatenate 10,000 data items into a large string each time, and then converts it to []byte using the standard method. Although the processing speed is sufficient to meet the needs in the current scenario, it seems that there is still room for optimization. Therefore, here are some principles of converting string to []byte:

- Standard conversion

Standard conversion is the implementation of []byte(string).

src/runtime/string.go

func stringtoslicebyte(buf *tmpBuf, s string) []byte {

var b[]byte

if buf != nil && len(s) <= len(buf) {

*buf = tmpBuf{}

b = buf[:len(s)]

} else {

b = rawbyteslice(len(s))

}

copy(b, s)

return b

}

// rawbyteslice allocates a new byte slice. The byte slice is not zeroed.

func rawbyteslice(size int) (b []byte) {

cap := roundupsize(uintptr(size))

p := mallocgc(cap, nil, false)

if cap != uintptr(size) {

memclrNoHeapPointers(add(p, uintptr(size)), cap-uintptr(size))

}

*(*slice)(unsafe.Pointer(&b)) = slice{p, size, int(cap)}

return

}

// The copy built-in function copies elements from a source slice into a

// destination slice. (As a special case, it also will copy bytes from a

// string to a slice of bytes.) The source and destination may overlap. Copy

// returns the number of elements copied, which will be the minimum of

// len(src) and len(dst).

func copy(dst, src []Type) int

The mallocgc() method will be called to allocate a new memory block, and then copy the string to []byte.

- Forced conversion

In go, any type of pointer *T can be converted to a pointer of type unsafe.Pointer, which can store the address of any variable. At the same time, the pointer of type unsafe.Pointer can also be converted back to a normal pointer, and it does not have to be the same as the previous type *T. In addition, the unsafe.Pointer type can also be converted to the uintptr type, which stores the value of the address pointed to by the pointer, so that we can perform numerical calculations on the address. The above is the implementation basis of the strong conversion method

func String2Bytes(s string) []byte {

sh := (*reflect.StringHeader)(unsafe.Pointer(&s))

bh := reflect.SliceHeader{

Data: sh.Data,

Len: sh.Len,

Cap: sh.Len,

}

return *(*[]byte)(unsafe.Pointer(&bh))

}

func Bytes2String(b []byte) string {

return *(*string)(unsafe.Pointer(&b))

}

However, the performance improvement in type conversion is not very large, but this method of using unsafe.Pointer will increase security risks. Directly modifying the value of the underlying array may cause serious errors, and it cannot be obtained through defer, which is difficult to troubleshoot.

Therefore, to summarize the above optimization for strings, using the strings.builder method to concatenate strings, and then using the standard conversion method to convert the string to []byte is the best solution.

2.5 Download optimization

In the future, you can consider asynchronous downloading and prompt users on the front-end interface to obtain the file later.

3. Problem

- After clicking download, the download pop-up box cannot be popped up immediately; the response header is not returned immediately, and you need to add a log to see where the request is pending.

No good solution has been found. After adding logs and observing, it seems that it is necessary to wait for grpc to return before the pop-up box will appear. It may be necessary to further study the protocol in the future to determine which parameters are related to the timing of the pop-up box.

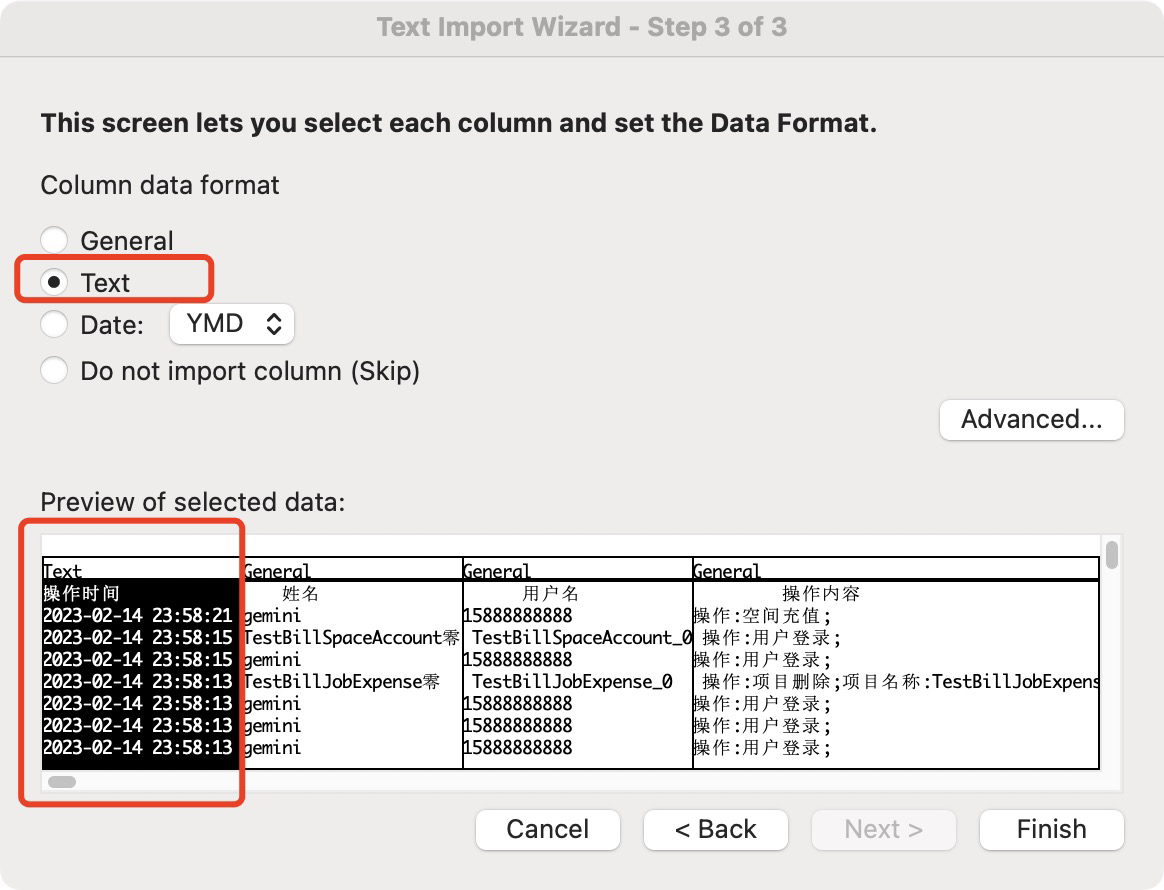

- The date format of the csv file is 2023-02-14 23:58:15. If you open it with Excel, it will automatically be converted to 2023/2/14 23:58:21.

You can refer to this link: https://www.winhelponline.com/blog/stop-excel-convert-text-to-number-date-format-csv-file/

Solution 1: When users use Excel to open the csv file, select the text format in the time item;

Solution 2:

You can format the .csv file in such a way that opening it normally in Excel (not importing via the Data tab) doesn’t transform the data format from text to number or date. For example, prefix the date and roll number fields with "=" and suffix them with two double-quotes (""), like below:

"steve","=""011""","=""10-Jan-2012""","Jungle Road, Leominster MA 1453"

instead of:

"steve","011","10-Jan-2012","Jungle Road, Leominster MA 1453"

However, this method will add extra characters after clicking the content =”2023-02-16 11:50:16”

Solution 3:

Adding \t after the time string can prevent it from being converted to date format. This is the current approach.